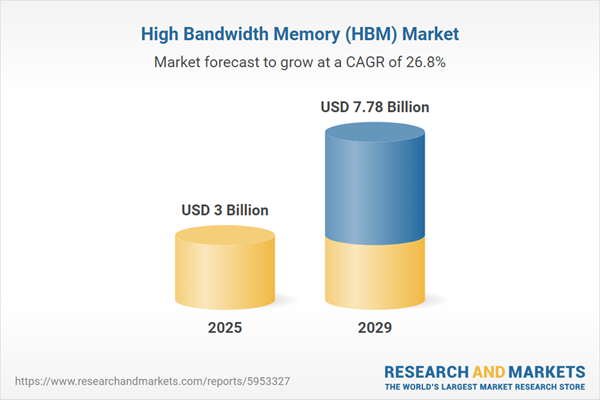

The high bandwidth memory (HBM) market size has grown exponentially in recent years. It will grow from $2.36 billion in 2024 to $3 billion in 2025 at a compound annual growth rate (CAGR) of 27.3%. The growth in the historic period can be attributed to advancements in graphics processing units (GPUs), the emergence of big data and analytics, the expansion of artificial intelligence (AI) and machine learning (ML), the increasing complexity of workloads, and demand for high-performance computing (HPC).

The high bandwidth memory (HBM) market size is expected to see exponential growth in the next few years. It will grow to $7.78 billion in 2029 at a compound annual growth rate (CAGR) of 26.8%. The growth in the forecast period can be attributed to the 5G network rollout, rapid growth in edge computing, increased adoption of the internet of things (IoT), advancements in artificial intelligence and deep learning, and the growing virtual reality (VR) and augmented reality (AR) market. Major trends in the forecast period include integration in autonomous vehicles, expansion in data center applications, advancements in the gaming industry, enhancements in mobile devices, and innovations in wearable technology.
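As a rough consistency check on the figures above, CAGR is (end value / start value) raised to the power 1 / years, minus 1. The Python sketch below applies that formula to the quoted values in USD billions; the helper names `cagr` and `project` are hypothetical and not part of the report. With the rounded published figures it gives roughly 27.1% for 2024 to 2025 and 26.9% for 2025 to 2029, close to but not exactly the quoted 27.3% and 26.8%; the small gap is presumably due to rounding of the published values (an assumption).

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Rounded market sizes quoted in the text, in USD billions.
historic = cagr(2.36, 3.00, 1)   # 2024 -> 2025
forecast = cagr(3.00, 7.78, 4)   # 2025 -> 2029

print(f"2024-2025 CAGR: {historic:.1%}")
print(f"2025-2029 CAGR: {forecast:.1%}")
# Re-project the 2029 value from the 2025 estimate at the quoted 26.8% rate.
print(f"2029 value at 26.8%: ${project(3.00, 0.268, 4):.2f}B")
```

Projecting $3 billion forward at exactly 26.8% for four years lands at about $7.76 billion, again within rounding of the quoted $7.78 billion.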

The increasing demand for high-performance computing (HPC) is expected to propel the growth of the high bandwidth memory (HBM) market going forward. HPC refers to the use of advanced computing technologies and systems to perform complex, demanding tasks at speeds and scales beyond the capabilities of traditional computing. In HPC systems, HBM gives processors faster and more efficient access to data: memory dies are stacked vertically in a single package, which significantly increases bandwidth and reduces latency, improving the overall computational performance of data-intensive applications. For instance, in January 2023, the U.S. Department of Energy announced a $1.8 million investment in six projects that use HPC resources at the U.S. National Laboratories to improve energy efficiency and productivity in the manufacturing sector. The projects apply HPC capabilities to manufacturing challenges and process optimization in support of a cleaner energy future, focusing on reducing carbon emissions in steelmaking, improving additive manufacturing to cut CO2 emissions, and optimizing battery manufacturing for electric vehicles. Therefore, the increasing demand for HPC is driving the growth of the HBM market.

Leading companies in the high-bandwidth memory market are prioritizing the development of innovative products, such as sustainable processors for data centers, to boost performance and energy efficiency. These sustainable processors are crafted to maximize energy savings and reduce the environmental footprint of data centers. For example, in January 2023, Intel Corporation, a U.S.-based semiconductor manufacturer, introduced its 4th Gen Xeon Scalable processors (codenamed Sapphire Rapids), the Xeon CPU Max Series (Sapphire Rapids HBM), and the Data Center GPU Max Series (Ponte Vecchio). These products offer substantial improvements in data center performance, efficiency, and security, along with new capabilities in AI, cloud, networking, edge computing, and high-performance supercomputing.

In February 2022, Advanced Micro Devices Inc. (AMD), a US-based semiconductor company, acquired Xilinx Inc. in a deal valued at approximately $35 billion. The acquisition significantly strengthened AMD’s position in the high-performance and adaptive computing market, giving it a comprehensive portfolio of leadership CPUs, GPUs, FPGAs, and adaptive SoCs and allowing it to address a broader market opportunity across cloud, edge, and intelligent devices. Xilinx Inc. is a US-based semiconductor and technology company that develops flexible processing platforms for a variety of technologies, including devices that integrate high bandwidth memory.

Major companies operating in the high bandwidth memory (HBM) market are Samsung Electronics Co. Ltd, Intel Corporation, International Business Machines (IBM) Corporation, Qualcomm Incorporated, SK Hynix Inc., Fujitsu Limited, Micron Technology Inc., Nvidia Corporation, Toshiba Corporation, Advanced Micro Devices Inc., Western Digital Corporation, STMicroelectronics SA, Renesas Electronics Corporation, Powerchip Technology Corporation, Cypress Semiconductor Corporation, Nanya Technology Corporation, Macronix International Co., Ltd., Silicon Motion Technology Corporation, Transcend Information Inc., Integrated Silicon Solution Inc. (ISSI), Adata Technology Co. Ltd., Netlist Inc., Open Silicon Inc., Micronet Ltd., Winbond Electronics Corporation.

North America was the largest region in the high bandwidth memory (HBM) market in 2024. Asia-Pacific is expected to be the fastest-growing region in the forecast period. The regions covered in the high bandwidth memory (HBM) market report are Asia-Pacific, Western Europe, Eastern Europe, North America, South America, Middle East, Africa. The countries covered in the high bandwidth memory (HBM) market report are Australia, Brazil, China, France, Germany, India, Indonesia, Japan, Russia, South Korea, UK, USA, Canada, Italy, Spain.

The high bandwidth memory market consists of sales of graphics processing units, data center accelerators, networking equipment, and field-programmable gate arrays (FPGAs). Values in this market are ‘factory gate’ values, that is, the value of goods sold by the manufacturers or creators of the goods, whether to other entities (including downstream manufacturers, wholesalers, distributors, and retailers) or directly to end customers. The value of goods in this market includes related services sold by the creators of the goods.

The market value is defined as the revenues that enterprises gain from the sale of goods and/or services within the specified market and geography through sales, grants, or donations in terms of the currency (in USD, unless otherwise specified).

High bandwidth memory is a computer memory interface designed for 3D-stacked synchronous dynamic random-access memory (SDRAM) to provide significantly increased bandwidth compared to traditional memory technologies. It is used with high-performance graphics accelerators, network devices, and AI ASICs in high-performance data centers; as on-package cache within CPUs and on-package RAM in forthcoming CPUs; and in FPGAs and certain supercomputers.
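To make "significantly increased bandwidth" concrete, peak bandwidth can be estimated as interface width (bits) times per-pin data rate (Gb/s) divided by 8 bits per byte. The Python sketch below assumes the commonly cited 1024-bit interface per HBM stack and JEDEC peak per-pin rates of about 2.0 Gb/s (HBM2), 3.2 Gb/s (HBM2E), and 6.4 Gb/s (HBM3), compared against a 64-bit DDR4-3200 module. These figures are illustrative assumptions, not data from this report, and shipping products may run at different rates; the `MemoryInterface` class is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryInterface:
    name: str
    bus_width_bits: int   # data pins per HBM stack or per DIMM
    gbps_per_pin: float   # assumed peak data rate per pin, in Gb/s

    def peak_bandwidth_gbs(self) -> float:
        """Peak bandwidth in GB/s: width (bits) x per-pin rate (Gb/s) / 8."""
        return self.bus_width_bits * self.gbps_per_pin / 8


# Illustrative peak figures (assumed from commonly cited JEDEC maxima).
INTERFACES = [
    MemoryInterface("DDR4-3200 DIMM", 64, 3.2),
    MemoryInterface("HBM2 stack", 1024, 2.0),
    MemoryInterface("HBM2E stack", 1024, 3.2),
    MemoryInterface("HBM3 stack", 1024, 6.4),
]

for iface in INTERFACES:
    print(f"{iface.name:15s} {iface.peak_bandwidth_gbs():7.1f} GB/s")
```

With these assumed figures, a single HBM2 stack (256 GB/s) offers about ten times the peak bandwidth of one DDR4-3200 module (25.6 GB/s), mainly because of its much wider interface rather than a faster per-pin rate.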

The main memory types covered are hybrid memory cube (HMC) and high-bandwidth memory (HBM). High bandwidth wide PIM (processing-in-memory) is a type of high-performance memory architecture that integrates processing elements directly within the memory, enhancing data processing speed and efficiency. The types covered are HBWPIM, HBM3, HBM2E, and HBM2, which are used in applications such as servers, networking, consumer, automotive, and other applications.

The revenues for a specified geography are consumption values, that is, revenues generated by organizations in the specified geography within the market, irrespective of where they are produced. They do not include revenues from resales along the supply chain, either further along the supply chain or as part of other products.

This product will be delivered within 3-5 business days.

Executive Summary

High Bandwidth Memory (HBM) Global Market Report 2025 provides strategists, marketers and senior management with the critical information they need to assess the market. This report focuses on the high bandwidth memory (HBM) market, which is experiencing strong growth. The report gives a guide to the trends that will shape the market over the next ten years and beyond.

Reasons to Purchase:

- Gain a truly global perspective with the most comprehensive report available on this market covering 15 geographies.

- Assess the impact of key macro factors such as conflict, pandemic and recovery, inflation and interest rate environment, and the second Trump presidency.

- Create regional and country strategies on the basis of local data and analysis.

- Identify growth segments for investment.

- Outperform competitors using forecast data and the drivers and trends shaping the market.

- Understand customers based on the latest market shares.

- Benchmark performance against key competitors.

- Suitable for supporting your internal and external presentations with reliable, high-quality data and analysis.

- Report will be updated with the latest data and delivered to you along with an Excel data sheet for easy data extraction and analysis.

- All data from the report will also be delivered in an Excel dashboard format.

Description

Where is the largest and fastest-growing market for high bandwidth memory (HBM)? How does the market relate to the overall economy, demography and other similar markets? What forces will shape the market going forward? The high bandwidth memory (HBM) market global report answers all these questions and many more. The report covers market characteristics, size and growth, segmentation, regional and country breakdowns, competitive landscape, market shares, trends and strategies for this market. It traces the market’s historic and forecast growth by geography.

- The market characteristics section of the report defines and explains the market.

- The market size section gives the market size ($b) covering both the historic growth of the market, and forecasting its development.

- The forecasts are made after considering the major factors currently impacting the market. These include the Russia-Ukraine war, rising inflation, higher interest rates, and the legacy of the COVID-19 pandemic.

- Market segmentations break down the market into sub markets.

- The regional and country breakdowns section gives an analysis of the market in each geography and the size of the market by geography and compares their historic and forecast growth. It covers the growth trajectory of COVID-19 for all regions, key developed countries and major emerging markets.

- The competitive landscape chapter gives a description of the competitive nature of the market, market shares, and a description of the leading companies. Key financial deals which have shaped the market in recent years are identified.

- The trends and strategies section analyses the shape of the market as it emerges from the crisis and suggests how companies can grow as the market recovers.

Scope

Markets Covered:

1) By Memory Type: Hybrid Memory Cube (HMC); High-Bandwidth Memory (HBM)

2) By Type: HBWPIM; HBM3; HBM2E; HBM2

3) By Application: Servers; Networking; Consumer; Automotive; Other Applications

Subsegments:

1) By Hybrid Memory Cube (HMC): HMC 1.0; HMC 2.0; HMC 3.0

2) By High-Bandwidth Memory (HBM): HBM1; HBM2; HBM2E; HBM3

Key Companies Mentioned: Samsung Electronics Co. Ltd; Intel Corporation; International Business Machines (IBM) Corporation; Qualcomm Incorporated; SK Hynix Inc.

Countries: Australia; Brazil; China; France; Germany; India; Indonesia; Japan; Russia; South Korea; UK; USA; Canada; Italy; Spain

Regions: Asia-Pacific; Western Europe; Eastern Europe; North America; South America; Middle East; Africa

Time Series: Five years historic and ten years forecast.

Data: Ratios of market size and growth to related markets, GDP proportions, expenditure per capita.

Data Segmentation: Country and regional historic and forecast data, market share of competitors, market segments.

Sourcing and Referencing: Data and analysis throughout the report is sourced using end notes.

Delivery Format: PDF, Word and Excel Data Dashboard.

Companies Mentioned

Some of the major companies featured in this High Bandwidth Memory (HBM) market report include:

- Samsung Electronics Co. Ltd

- Intel Corporation

- International Business Machines (IBM) Corporation

- Qualcomm Incorporated

- SK Hynix Inc.

- Fujitsu Limited

- Micron Technology Inc.

- Nvidia Corporation

- Toshiba Corporation

- Advanced Micro Devices Inc.

- Western Digital Corporation

- STMicroelectronics SA

- Renesas Electronics Corporation

- Powerchip Technology Corporation

- Cypress Semiconductor Corporation

- Nanya Technology Corporation

- Macronix International Co., Ltd.

- Silicon Motion Technology Corporation

- Transcend Information Inc.

- Integrated Silicon Solution Inc. (ISSI)

- Adata Technology Co. Ltd.

- Netlist Inc.

- Open Silicon Inc.

- Micronet Ltd.

- Winbond Electronics Corporation

Table Information

| Report Attribute | Details |
|---|---|
| No. of Pages | 200 |
| Published | March 2025 |
| Forecast Period | 2025 - 2029 |
| Estimated Market Value (USD) | $3 Billion |
| Forecasted Market Value (USD) | $7.78 Billion |
| Compound Annual Growth Rate | 26.8% |
| Regions Covered | Global |
| No. of Companies Mentioned | 26 |