Exploring the Emergence and Impact of AI-Powered Speech Synthesis Technologies That Are Redefining Voice Interactions and Communication Experiences Across Diverse Sectors

AI-powered speech synthesis stands at the forefront of a revolution in human-computer interaction, transforming static text into rich, natural-sounding voice experiences. Initially constrained by rudimentary rule-based systems, the field has expanded through successive waves of neural architecture breakthroughs, propelling quality and intelligibility to near human parity. As a result, voice-driven applications have become integral in sectors ranging from accessibility services to entertainment, reinforcing the importance of lifelike speech in fostering user engagement.

Moreover, the confluence of deep learning advancements and increased computational power has democratized the deployment of speech synthesis solutions across devices and platforms. Consequently, organizations are able to deliver multilingual support, dynamic voice modulation, and real-time responses at scale. Transitioning from proof-of-concept to mainstream adoption, this technology now underpins virtual assistants, audiobook narration, and voice cloning for creative production.

Furthermore, the growing emphasis on personalization, driven by consumer demand for tailored experiences, has accelerated investment in prosody control and emotional expression models. As enterprises seek to differentiate offerings, the ability to craft distinct vocal identities has emerged as a strategic asset. Ultimately, these developments mark the beginning of a new era in voice-enabled interfaces, setting the stage for further innovation and market expansion.

Uncovering the Transformative Technological Breakthroughs and Architecture Evolution Driving Adoption in AI-Enhanced Speech Synthesis Ecosystems

The landscape of AI-driven speech synthesis has undergone transformative shifts, shaped by pioneering research and industrial-scale deployments. Early methods, rooted in concatenative and parametric frameworks, have given way to neural text-to-speech systems that leverage large-scale training sets and deep sequence modeling. Consequently, the fidelity of generated voices has increased dramatically, closing the gap between synthetic and human speech.

In parallel, advancements in formant synthesis have complemented neural approaches by offering lightweight models suitable for low-resource environments. These hybrid strategies enable deployment on edge devices, empowering applications where connectivity is limited or latency must be minimized. Moreover, the integration of transfer learning techniques has accelerated language expansion, making regional dialects and less common tongues more accessible.

Additionally, the industry has witnessed a shift towards cloud-based delivery models that facilitate rapid updates and centralized management, while on-premise solutions continue to be adopted where data privacy and compliance requirements are paramount. Taken together, these technological breakthroughs and architectural refinements have broadened the scope of viable use cases, driving adoption in customer service, assistive technology, gaming, and beyond.

Examining the Cumulative Impact of United States Tariff Policies on AI-Powered Speech Technology Supply Chains and Market Dynamics

The imposition of updated United States tariff policies has introduced a layer of complexity for stakeholders in the AI speech synthesis supply chain. With duties applied to imported semiconductors and computing hardware, organizations face elevated costs for processing units critical to model training and inference tasks. As these components represent a substantial portion of infrastructure expenditures, the escalation in import levies has reshaped budgeting considerations.

In response, many solution providers have reevaluated their sourcing strategies, exploring partnerships with domestic manufacturers and diversifying supplier portfolios. Consequently, the industry has observed a resurgence in local fabrication initiatives and collaborative research efforts aimed at reducing dependence on single-source imports. Moreover, enterprises are increasingly integrating optimized algorithms that reduce computational overhead, thereby mitigating the financial impact of hardware tariffs.

Furthermore, end users seeking on-premise deployments have become more selective in hardware refresh cycles, prioritizing stability and long-term support contracts. Transitioning to subscription-based cloud offerings has also gained traction as a flexible alternative, although concerns regarding data sovereignty and regulatory compliance remain influential. Ultimately, these tariff-driven dynamics are prompting a strategic realignment of procurement, deployment, and research priorities across the AI speech synthesis community.

Illuminating How Component Design, Services, Software, Voice Type Variations, Deployment Preferences, Applications, and End Users Converge to Shape the AI Speech Synthesis Market

Detailed segmentation analysis reveals that the AI-powered speech synthesis market is influenced by the nature of the underlying solution, where software platforms compete alongside service offerings for design, customization, and managed deployment. Voice type distinctions further shape strategic investments: while concatenative speech synthesis and formant-based approaches maintain relevance in legacy systems, neural text-to-speech models are enjoying widespread momentum, and parametric techniques are leveraged for small-footprint environments.

Simultaneously, deployment preferences oscillate between cloud-based solutions, favored for scalability and continuous integration, and on-premise implementations that address stringent data protection mandates. In terms of application, speech synthesis is gaining footholds within accessibility solutions and assistive technologies, while content creation ecosystems embrace it for audiobook narration, podcasting, and multimedia dubbing. Customer service and call center automation continue to represent a prominent use case, with conversational chatbots and virtual assistants extending the boundaries of user engagement. The gaming and animation industry exploits dynamic voice rendering to enrich storytelling, and voice cloning applications promise personalized interactions in branding and marketing contexts.

Lastly, end-user segmentation underscores adoption across automotive infotainment systems, BFSI client services, education and e-learning platforms, government and defense communications, healthcare patient engagement tools, IT and telecom voice portals, media and entertainment production workflows, and retail and e-commerce voice assistants. Together, these layers of segmentation offer a holistic perspective on where resources, innovation, and market development are most intensively concentrated.

Revealing How Regional Innovation Investments, Infrastructure, and Regulatory Environments Drive Distinct Adoption Patterns in the Americas, Europe, Middle East & Africa, and Asia-Pacific

Regional dynamics highlight distinct patterns across key territories. In the Americas, robust R&D ecosystems and extensive cloud infrastructure investments support rapid innovation cycles, especially within North America's technology hubs. Latin America is emerging as a testbed for voice-enabled accessibility projects, driven by increasing digital inclusion initiatives and language diversity requirements.

Conversely, the Europe, Middle East & Africa region benefits from harmonized regulatory frameworks that emphasize data privacy and ethical AI use, prompting providers to develop privacy-centric architectures and transparent model governance. Europe's emphasis on multilingual capabilities has also stimulated localized voice offerings, while Middle Eastern and African markets are witnessing growth in education technology applications tailored to regional dialects.

Asia Pacific stands out for its dynamic adoption rates, fueled by large-scale government digitalization programs and a thriving telecommunications sector. Cloud-native platforms and edge computing initiatives are proliferating, enabling real-time speech synthesis in smart city frameworks and consumer electronics. As emerging markets invest in infrastructure modernization, the demand for voice-driven customer engagement and e-learning solutions continues to strengthen. Across all regions, collaborative public-private partnerships and localized content strategies remain key drivers of sustained market expansion.

Highlighting How Strategic Partnerships, Technology Specialization, and Collaborative Innovation Are Shaping Competitive Positioning in the AI Speech Synthesis Industry

Leading stakeholders in AI-powered speech synthesis are differentiating through specialization, partnerships, and continuous innovation. Established technology firms focus on integrating speech synthesis capabilities into broader AI suites, leveraging existing cloud platforms and developer ecosystems. These companies emphasize scalability and reliability, addressing enterprise concerns around uptime and support.

Simultaneously, agile start-ups are carving niches by advancing neural voice cloning, emotional prosody control, and fine-grained customization tools. By open-sourcing components and fostering developer communities, they accelerate model refinement and broaden technology access. Strategic collaborations between hardware manufacturers and software specialists are also on the rise, resulting in optimized inference engines that deliver high-fidelity speech with reduced latency on edge devices.

Moreover, original equipment manufacturers in sectors such as automotive and consumer electronics are forging alliances to embed voice synthesis modules directly into end-user products. These partnerships facilitate co-development of multilingual voice packs and context-aware interaction flows. As competitive positioning intensifies, companies are investing in patent portfolios and cross-licensing agreements to secure technological leadership while exploring joint ventures for global market penetration.

Strategic Roadmap for Industry Leaders to Invest in Advanced Neural Models, Diversify Supply Chains, Expand Application Niches, and Strengthen Governance Frameworks in AI Speech Synthesis

To remain at the forefront of AI-powered speech synthesis, industry leaders should prioritize investment in state-of-the-art neural models that enhance naturalness and prosodic expression. By allocating resources to research initiatives and cross-disciplinary collaborations, organizations can accelerate the development of emotionally aware voice outputs that resonate with end users.

Additionally, fostering strategic alliances with hardware providers and semiconductor manufacturers can mitigate supply chain risks associated with fluctuating tariff environments. Embedding optimization routines for low-power inference will enable deployment on edge devices, catering to market segments demanding on-device processing and offline functionality.

Furthermore, expanding application portfolios through partnerships with content creators, educational institutions, and healthcare providers will unlock new growth avenues. Tailoring solutions to domain-specific requirements and regulatory standards ensures relevance and compliance. Emphasizing modular architectures and API-driven integration will enhance interoperability, facilitating seamless adoption across enterprise IT landscapes.

Finally, establishing robust governance frameworks around data privacy, ethical usage, and model transparency will build stakeholder trust and support sustainable adoption. By proactively addressing compliance considerations, organizations can differentiate their offerings and establish long-term competitive advantage.

Detailed Overview of Rigorous Multi-Phase Research Methodology Incorporating Primary Interviews, Secondary Intelligence, Data Triangulation, and Quantitative Analysis

The foundation of this analysis is a rigorous, multi-phase research methodology designed to ensure accuracy, relevance, and comprehensiveness. Primary research involved in-depth interviews with executives, product architects, and application engineers across technology vendors, end-user enterprises, and system integrators. These conversations provided qualitative insights into adoption drivers, deployment experiences, and future development priorities.

Supplementing primary interviews, extensive secondary research was conducted through peer-reviewed journals, industry white papers, technical filings, and regulatory publications. Data triangulation was employed to reconcile divergent viewpoints, validate assumptions, and refine segmentation parameters. Market trends were further corroborated by analyzing patent filings, funding announcements, and partnership disclosures.

Quantitative analysis leveraged structured data sets covering solution deployments, technology performance benchmarks, and user satisfaction metrics. Advanced analytical techniques, including scenario modeling and sensitivity testing, were applied to assess the impact of variables such as tariff changes and regional infrastructure investments. Throughout the process, iterative reviews by subject matter experts ensured alignment with industry realities and mitigated potential biases.

Synthesizing Key Findings and Forward-Looking Perspectives That Define the Future Trajectory of AI-Enhanced Speech Synthesis Adoption, Innovation, and Strategic Differentiation

In summary, AI-powered speech synthesis has evolved from experimental prototypes to a critical enabler of voice-driven interaction across industries. Technological innovations in neural modeling, prosody control, and edge optimization are unlocking new possibilities in accessibility, content creation, and customer engagement. Concurrently, regulatory shifts and tariff policies are reshaping supply chains and strategic priorities, prompting stakeholders to adopt flexible sourcing and modular design principles.

Segmentation analysis highlights the interplay between software and service offerings, voice technology variants, deployment preferences, and diverse application areas, underscoring the importance of tailored solutions. Regional insights illustrate how infrastructure, regulatory frameworks, and digitalization programs drive varied adoption trajectories across the Americas, Europe, Middle East & Africa, and Asia-Pacific.

As competition intensifies, companies are pursuing strategic partnerships, intellectual property strategies, and targeted innovation to secure leadership positions. Actionable recommendations emphasize investment in advanced neural architectures, supply chain diversification, application expansion, and governance frameworks. Together, these pathways define a roadmap for sustainable growth and long-term value creation in the evolving landscape of AI-enhanced speech synthesis.

Market Segmentation & Coverage

This research report forecasts revenues and analyzes trends in each of the following sub-segmentations:

- Component
  - Services
  - Software
- Voice Type
  - Concatenative Speech Synthesis
  - Formant Synthesis
  - Neural Text-to-Speech (NTTS)
  - Parametric Speech Synthesis
- Deployment Mode
  - Cloud-Based
  - On-Premise
- Application
  - Accessibility Solutions
  - Assistive Technologies
  - Audiobook & Podcast Generation
  - Content Creation & Dubbing
  - Customer Service & Call Centers
  - Gaming & Animation
  - Virtual Assistants & Chatbots
  - Voice Cloning
- End-User
  - Automotive
  - BFSI
  - Education & E-learning
  - Government & Defense
  - Healthcare
  - IT & Telecom
  - Media & Entertainment
  - Retail & E-commerce
- Region
  - Americas
    - North America
      - United States
      - Canada
      - Mexico
    - Latin America
      - Brazil
      - Argentina
      - Chile
      - Colombia
      - Peru
  - Europe, Middle East & Africa
    - Europe
      - United Kingdom
      - Germany
      - France
      - Russia
      - Italy
      - Spain
      - Netherlands
      - Sweden
      - Poland
      - Switzerland
    - Middle East
      - United Arab Emirates
      - Saudi Arabia
      - Qatar
      - Turkey
      - Israel
    - Africa
      - South Africa
      - Nigeria
      - Egypt
      - Kenya
  - Asia-Pacific
    - China
    - India
    - Japan
    - Australia
    - South Korea
    - Indonesia
    - Thailand
    - Malaysia
    - Singapore
    - Taiwan

Companies Mentioned

The companies profiled in this AI-Powered Speech Synthesis market report include:

- Acapela Group SA

- Acolad Group

- Altered, Inc.

- Amazon Web Services, Inc.

- Baidu, Inc.

- BeyondWords Inc.

- CereProc Limited

- Descript, Inc.

- Eleven Labs, Inc.

- International Business Machines Corporation

- iSpeech, Inc.

- IZEA Worldwide, Inc.

- LOVO Inc.

- Microsoft Corporation

- MURF Group

- Neuphonic

- Nuance Communications, Inc.

- ReadSpeaker AB

- Replica Studios Pty Ltd.

- Sonantic Ltd.

- Synthesia Limited

- Verint Systems Inc.

- VocaliD, Inc.

- Voxygen S.A.

- WellSaid Labs, Inc.

Table Information

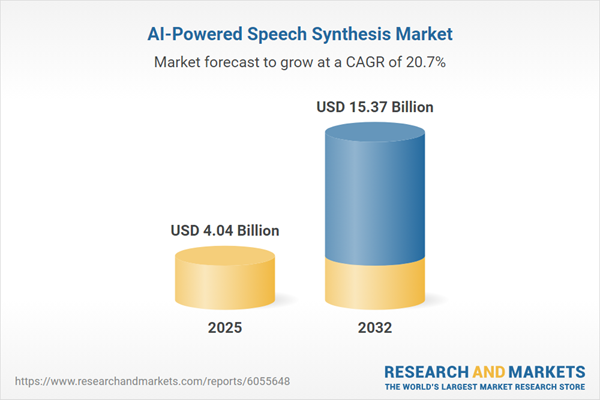

| Report Attribute | Details |

|---|---|

| No. of Pages | 185 |

| Published | November 2025 |

| Forecast Period | 2025 - 2032 |

| Estimated Market Value ( USD | $ 4.04 Billion |

| Forecasted Market Value ( USD | $ 15.37 Billion |

| Compound Annual Growth Rate | 20.7% |

| Regions Covered | Global |

| No. of Companies Mentioned | 26 |
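
For readers who want to relate the growth rate in the table to the two market values, the following is a minimal Python sketch of the standard compound annual growth rate arithmetic. The function names `implied_cagr` and `project` are illustrative, and the choice of seven compounding periods (2025 through 2032) is an assumption; the table does not state which base year the published rate uses.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Annual growth rate that compounds start_value into end_value over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached when start_value grows at `rate` per year for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures taken from the table above (USD billions).
estimated_value = 4.04
forecasted_value = 15.37
reported_cagr = 0.207

# Assumption: seven compounding periods, from 2025 through 2032.
periods = 2032 - 2025

cagr = implied_cagr(estimated_value, forecasted_value, periods)
projected = project(estimated_value, reported_cagr, periods)

print(f"Implied CAGR over {periods} years: {cagr:.1%}")
print(f"Value reached at {reported_cagr:.1%} per year: ${projected:.2f} billion")
```

Under the seven-period assumption, the implied rate comes out close to, but slightly above, the published 20.7%. The small gap may reflect a different base year or rounding in the source figures.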